This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

ANF Backup for SAP Solutions

Introduction

Azure NetApp Files backup expands the data protection capabilities of Azure NetApp Files by providing a fully managed backup solution for long-term recovery, archive, and compliance. Backups created by the service are stored in Azure storage, independent of the volume snapshots that are available for near-term recovery or cloning. Backups taken by the service can be restored to new Azure NetApp Files volumes within the same Azure region. Azure NetApp Files backup supports both policy-based (scheduled) backups and manual (on-demand) backups. For additional information, see https://learn.microsoft.com/en-us/azure/azure-netapp-files/snapshots-introduction

To start with, please read: Understand Azure NetApp Files backup | Microsoft Learn

ANF Resource limits: Resource limits for Azure NetApp Files | Microsoft Learn

IMPORTANT: Azure NetApp Files backup is in public preview and not yet a generally available Azure service. For other restrictions and conditions, read the article Requirements and Considerations for Azure NetApp Files backup.

IMPORTANT: All scripts shown in this blog are demonstration scripts; Microsoft provides no support for them and accepts no liability. The scripts can be used and modified for individual use cases.

Design

The four big benefits of ANF backup are:

- Inline compression when taking a backup.

- Deduplication – this reduces the amount of storage needed in the Blob space.

- Block-level delta copy – only changed blocks are copied, which reduces the time and the space needed for each backup.

- The database server is not impacted when taking the backup. All traffic goes directly from the storage to the Blob space over the Microsoft backbone and NOT over the client network. The backup also does NOT impact the storage volume quota. The database server has the full bandwidth available for normal operation.

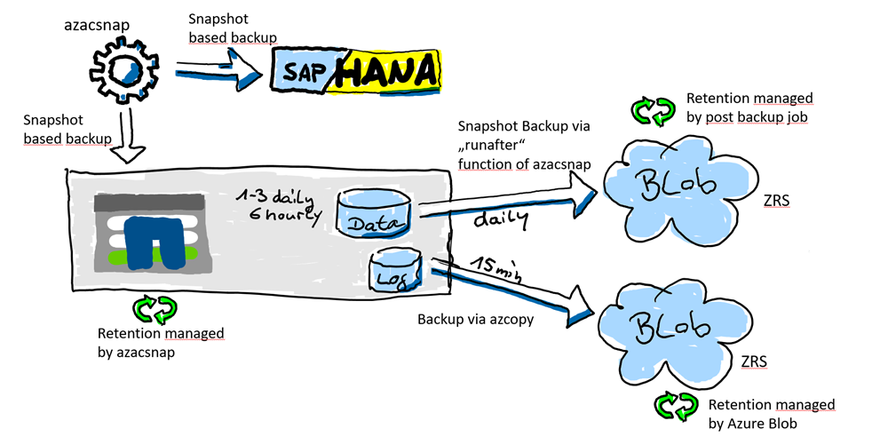

How it will work

We split the backup into two parts. The data volume is snapshotted with azacsnap. Here it is important that the data volume is in a consistent state before we take the snapshot; azacsnap manages this.

The log backup area is an “offline” volume and can be backed up at any time without talking to the database. It also needs a much higher backup frequency than the data volume to reduce the RPO. The database can be “rolled forward” from any data snapshot if you have all the logs created after that data volume snapshot. Therefore, how often we back up the log backup folder is very important for reducing the RPO. The log backup volume does not need a snapshot at all because, as mentioned, all the files there are offline files.

Setup

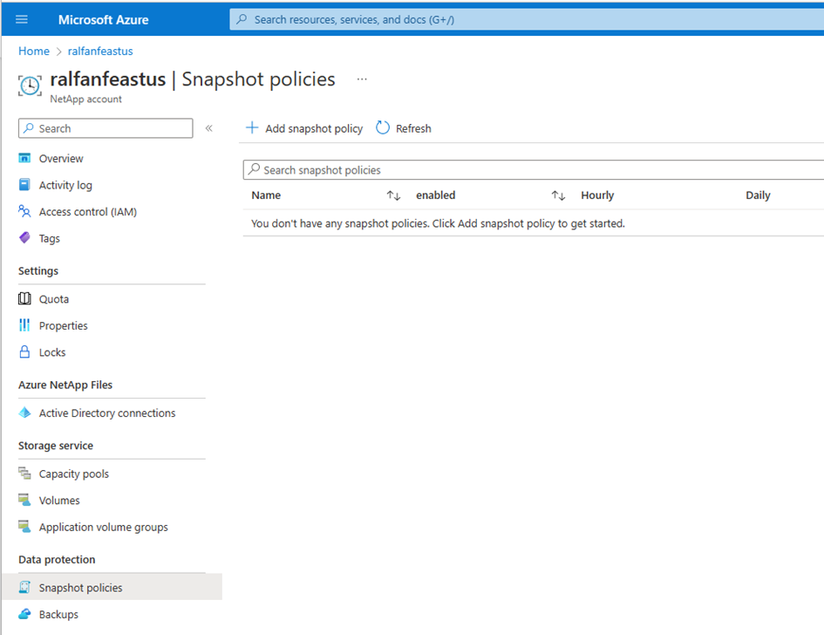

Create the Snapshot Policy

The first thing you need to do is create a snapshot policy. It will not actually be used, but the ANF Backup workflow requires it. The intent is to trigger the backup manually for an application-consistent snapshot created by azacsnap.

Add a Snapshot Policy

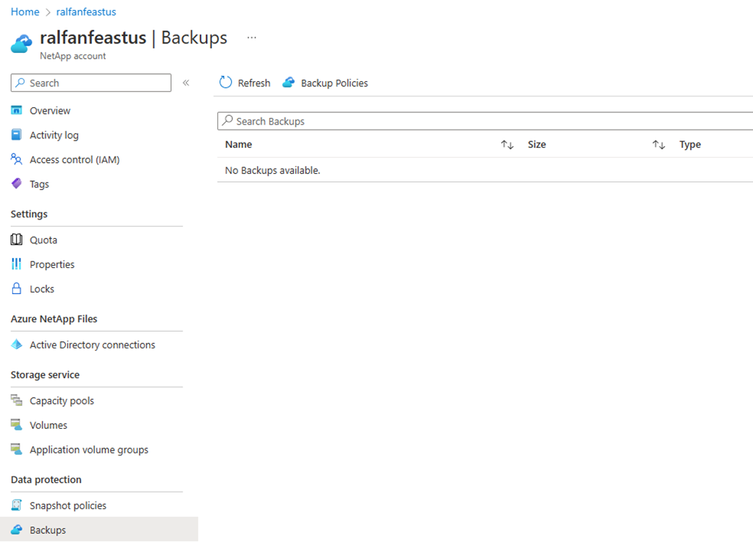

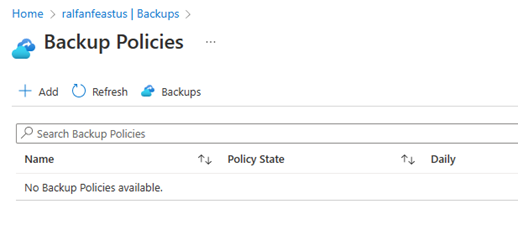

Create the Backup Policy

After this, we need to create a backup policy.

Create a Backup Policy

Install azacsnap

Download and install azacsnap

Download the tool from the Microsoft site https://aka.ms/azacsnapinstaller

More information about azacsnap can be found here: What is Azure Application Consistent Snapshot tool for Azure NetApp Files | Microsoft Learn

Log in to the VM and install the tool:

cd /tmp

ls -l azacsnap_7_\(1A8FDFF\)_installer.run

-rw-r--r-- 16476174 Jan 11 13:50 azacsnap_7_(1A8FDFF)_installer.run

chmod 755 azacsnap_7_\(1A8FDFF\)_installer.run

./azacsnap_7_\(1A8FDFF\)_installer.run -I

...

..

+-------------------------------------------------------------+

| Install complete! Follow the steps below to configure.

+-------------------------------------------------------------+

Change into the snapshot user account.....

su - azacsnap

Setup the secure credential store for database access.....

a. SAP HANA - setup the HANA Secure User Store with hdbuserstore (refer to documentation)

b. Oracle DB - setup the Oracle Wallet (refer to documentation)

c. Db2 - setup for local or remote connectivity (refer to documentation)

Change to location of commands.....

cd /home/azacsnap/bin/

Create a configuration file.....

azacsnap -c configure --configuration new

Test the connection to storage.....

azacsnap -c test --test storage

Test the connection to database.....

a. SAP HANA .....

1. without SSL

azacsnap -c test --test hana

2. with SSL, you will need to choose the correct SSL option

azacsnap -c test --test hana --ssl=<commoncrypto|openssl>

b. OracleDB .....

azacsnap -c test --test oracle

c. Db2 .....

azacsnap -c test --test db2

Run your first snapshot backup..... (example below)

azacsnap -c backup --volume=data --prefix=db_snapshot_test --retention=1

Start Cloud Shell in the Azure portal and create the service principal for your Azure subscription.

Install the Azure Application Consistent Snapshot tool for Azure NetApp Files | Microsoft Learn

az ad sp create-for-rbac --name "AzAcSnap" --role Contributor --scopes /subscriptions/**** --sdk-auth

where **** is your subscription ID.

Store the output in a file called auth.json.

Change to the azacsnap user, then create and test the environment.

su - azacsnap

cd bin

vi auth.json

auth.json

{

"clientId": "***************",

"clientSecret": "***************",

"subscriptionId": "***************",

"tenantId": "***************",

"activeDirectoryEndpointUrl": "https://login.microsoftonline.com",

"resourceManagerEndpointUrl": "https://management.azure.com/",

"activeDirectoryGraphResourceId": "https://graph.windows.net/",

"sqlManagementEndpointUrl": "https://management.core.windows.net:8443/",

"galleryEndpointUrl": "https://gallery.azure.com/",

"managementEndpointUrl": "https://management.core.windows.net/"

}
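Before running the configuration, a quick check that auth.json is complete can save a failed storage test later. The following is a minimal sketch, assuming that only the four identity keys (clientId, clientSecret, subscriptionId, tenantId) have to be filled in by hand; the function name check_auth_file is hypothetical, and it uses plain grep because jq is only installed later in this guide.

```shell
#!/bin/bash
# Hypothetical helper: verify that auth.json contains non-empty values for the
# four service principal keys. Assumption: the endpoint URLs keep their defaults.
# Returns 0 if complete, 1 if a key is missing or empty, 2 if the file is unreadable.
check_auth_file() {
    local file="$1"
    local key missing=0
    if [ ! -r "$file" ]; then
        echo "ERROR: $file not found or not readable" >&2
        return 2
    fi
    for key in clientId clientSecret subscriptionId tenantId; do
        # match "key": "non-empty value"
        if ! grep -Eq "\"$key\"[[:space:]]*:[[:space:]]*\"[^\"]+\"" "$file"; then
            echo "MISSING: $key" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

For example, `check_auth_file auth.json && chmod 600 auth.json`. Restricting the file to the azacsnap user is only a suggestion, since it contains the client secret.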

Create the azacsnap config

azacsnap -c configure --configuration=new

Building new config file

Add comment to config file (blank entry to exit adding comments): ANF Backup Test

Add comment to config file (blank entry to exit adding comments):

Enter the database type to add, 'hana', 'oracle', 'db2', or 'exit' (to save and exit): hana

=== Add SAP HANA Database details ===

HANA SID (e.g. H80): ANA

HANA Instance Number (e.g. 00): 00

HANA HDB User Store Key (e.g. `hdbuserstore List`): AZACSNAP

HANA Server's Address (hostname or IP address): ralfvm01

Do you need AzAcSnap to automatically disable/enable backint during snapshot? (y/n) [n]:

=== Azure NetApp Files Storage details ===

Are you using Azure NetApp Files for the database? (y/n) [n]: y

Enter new value for 'ANF Backup (none, renameOnly)' (current = 'none'): none

--- DATA Volumes have the Application put into a consistent state before they are snapshot ---

Add Azure NetApp Files resource to DATA Volume section of Database configuration? (y/n) [n]: y

Full Azure NetApp Files Storage Volume Resource ID (e.g. /subscriptions/.../resourceGroups/.../providers/Microsoft.NetApp/netAppAccounts/.../capacityPools/Premium/volumes/...): /subscriptions/**********/resourceGroups/ralf_rg/providers/Microsoft.NetApp/netAppAccounts/ralf**/capacityPools/ralfanfultra/volumes/ralfANAdata

Service Principal Authentication filename or Azure Key Vault Resource ID (e.g. auth-file.json or https://...): auth.json

Add Azure NetApp Files resource to DATA Volume section of Database configuration? (y/n) [n]:

--- OTHER Volumes are snapshot immediately without preparing any application for snapshot ---

Add Azure NetApp Files resource to OTHER Volume section of Database configuration? (y/n) [n]: y

Full Azure NetApp Files Storage Volume Resource ID (e.g. /subscriptions/.../resourceGroups/.../providers/Microsoft.NetApp/netAppAccounts/.../capacityPools/Premium/volumes/...): /subscriptions/***********/resourceGroups/ralf_rg/providers/Microsoft.NetApp/netAppAccounts/ralf**/capacityPools/ralfanfprem/volumes/ralfANAshared

Service Principal Authentication filename or Azure Key Vault Resource ID (e.g. auth-file.json or https://...): auth.json

Add Azure NetApp Files resource to OTHER Volume section of Database configuration? (y/n) [n]:

=== Azure Managed Disk details ===

Are you using Azure Managed Disks for the database? (y/n) [n]:

=== Azure Large Instance (Bare Metal) Storage details ===

Are you using Azure Large Instance (Bare Metal) for the database? (y/n) [n]:

Enter the database type to add, 'hana', 'oracle', 'db2', or 'exit' (to save and exit): exit

Editing configuration complete, writing output to 'azacsnap.json'.

Create the hdbuserstore key for azacsnap

hdbuserstore set AZACSNAP ralfvm01:30013 system <password>

Test the storage connection

azacsnap -c test --test=storage

BEGIN : Test process started for 'storage'

BEGIN : Storage test snapshots on 'data' volumes

BEGIN : Test Snapshots for Storage Volume Type 'data'

PASSED: Storage test completed successfully for all 'data' Volumes

BEGIN : Storage test snapshots on 'other' volumes

BEGIN : Test Snapshots for Storage Volume Type 'other'

PASSED: Storage test completed successfully for all 'other' Volumes

END : Storage tests complete

END : Test process complete for 'storage'

Test the DB connection

azacsnap -c test --test=hana

BEGIN : Test process started for 'hana'

BEGIN : SAP HANA tests

PASSED: Successful connectivity to HANA version 2.00.066.00.16710961

END : Test process complete for 'hana'

Create the first snapshot on the data volume

azacsnap -c backup --volume=data --prefix=Test --retention=3

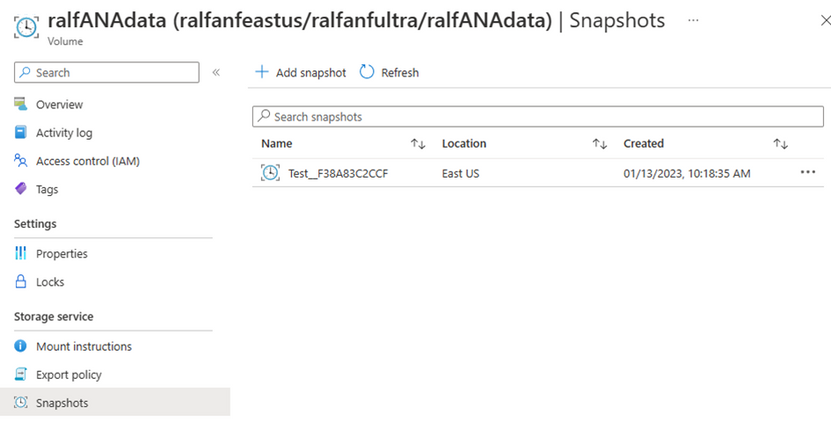

Check in the portal that the snapshot is visible.

Create the first snapshot on the shared volume

azacsnap -c backup --volume=other --prefix=Test --retention=3

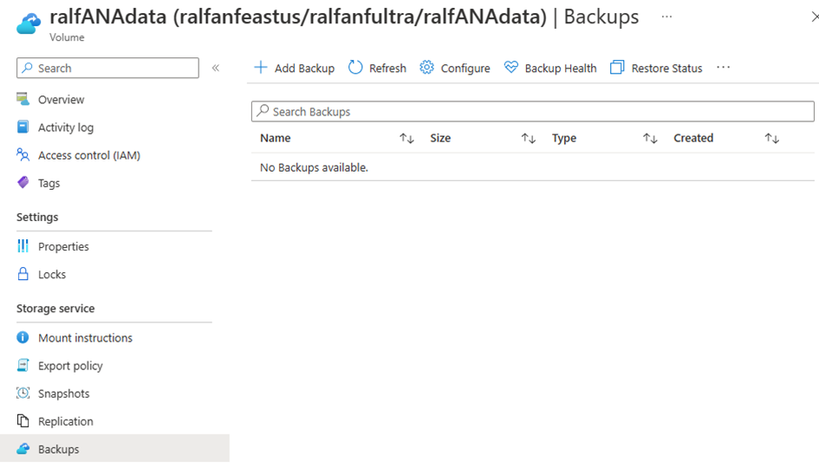

Now configure the data volume for ANF Backup

Start the snapshot backup using the snapshot you just created. In a “normal” environment, this would be the “daily” database snapshot.

The initial backup can run for several hours, depending on the database size.

The big advantage is that after this initial copy, every subsequent backup is “only” a delta copy of the changed database blocks.

Azure CLI

To automate the snapshot creation and immediately start the ANF backup of that snapshot, NetApp developed a script that should help you set this up.

These scripts are provided without support.

A prerequisite is a working Azure CLI.

The Azure CLI must be installed as the azacsnap user.

To do this, run the following commands:

As root:

zypper refresh && zypper --non-interactive install curl gcc libffi-devel python3-devel libopenssl-devel

As azacsnap:

pip install --upgrade pip

curl -L https://aka.ms/InstallAzureCli | bash

...

..

===> In what directory would you like to place the install? (leave blank to use '/home/azacsnap/lib/azure-cli'):

-- Creating directory '/home/azacsnap/lib/azure-cli'.

-- We will install at '/home/azacsnap/lib/azure-cli'.

===> In what directory would you like to place the 'az' executable? (leave blank to use '/home/azacsnap/bin'):

-- The executable will be in '/home/azacsnap/bin'.

...

..

Test the az command by requesting its version. Version 2.39 is fine for our purpose; there is no need to upgrade.

az --version

azure-cli 2.39.0 *

core 2.39.0 *

telemetry 1.0.6 *

Dependencies:

msal 1.18.0b1

azure-mgmt-resource 21.1.0b1

Python location '/home/azacsnap/lib/azure-cli/bin/python'

Extensions directory '/home/azacsnap/.azure/cliextensions'

Python (Linux) 3.6.15 (default, Sep 23 2021, 15:41:43) [GCC]

Legal docs and information: aka.ms/AzureCliLegal

You have 3 updates available. Consider updating your CLI installation with 'az upgrade'

Please let us know how we are doing: https://aka.ms/azureclihats

and let us know if you're interested in trying out our newest features: https://aka.ms/CLIUXstudy

Sign in with az login: open the page shown in a web browser and enter the displayed code. This login lasts for 6 months. A better solution is to work with managed identities, but for this test az login is good enough.

Use managed identities on an Azure VM for sign-in - Azure AD - Microsoft Entra | Microsoft Learn

az login

/home/azacsnap/lib/azure-cli/lib/python3.6/site-packages/azure/cli/command_modules/iot/_utils.py:9: CryptographyDeprecationWarning: Python 3.6 is no longer supported by the Python core team. Therefore, support for it is deprecated in cryptography. The next release of cryptography (40.0) will be the last to support Python 3.6.

from cryptography import x509

To sign in, use a web browser to open the page https://microsoft.com/devicelogin and enter the code 4711-0815 to authenticate.

[

{

"cloudName": "AzureCloud",

"homeTenantId": "**************",

"id": "***************",

"isDefault": true,

"managedByTenants": [],

"name": "Customer.com",

"state": "Enabled",

"tenantId": "*************",

"user": {

"name": "pipo@customer.com",

"type": "user"

}

}

]

Create the Backup script

ANF backup script – anfBackup.sh

The script requires jq (a light-weight JSON parser) to be installed on the OS:

zypper in jq

...

The following 3 NEW packages are going to be installed:

jq libjq1 libonig4

...

..

Create the script as the azacsnap user:

su - azacsnap

cd bin

vi anfBackup.sh

#!/bin/bash

export PATH=$PATH:$HOME/bin

if [ $# -lt 4 ]; then

echo "$0 <backup_prefix> <primary_retention> <snapshot> <configfile>"

exit 1

fi

BACKUP_PREFIX=$1

PRIMARY_BACKUP_RETENTION=$2

SECONDARY_BACKUP_RETENTION=$((PRIMARY_BACKUP_RETENTION + 2))

SNAPSHOTNAME=$3

AZCONFIG=$4

LOCATION="eastus"

# parse azacsnap.json to get all data volumes and the required az netappfiles parameters

numDataVols=`jq '.database[].hana.anfStorage[].dataVolume | length' $AZCONFIG`

echo "Found $numDataVols data volume(s) in the azacsnap configuration."

for ((i=0; i<$numDataVols; i++)); do

accountName=`jq -r '.database[].hana.anfStorage[].dataVolume['$i'].accountName' $AZCONFIG`

volumeName=`jq -r '.database[].hana.anfStorage[].dataVolume['$i'].volume' $AZCONFIG`

poolName=`jq -r '.database[].hana.anfStorage[].dataVolume['$i'].poolName' $AZCONFIG`

rgName=`jq -r '.database[].hana.anfStorage[].dataVolume['$i'].resourceGroupName' $AZCONFIG`

anfBackupConfig=`jq -r '.database[].hana.anfStorage[].anfBackup' $AZCONFIG`

backupName=$SNAPSHOTNAME

if [ "$anfBackupConfig" = "renameOnly" ]; then

volumeNameLc=$(echo $volumeName | tr '[:upper:]' '[:lower:]')

backupName="${SNAPSHOTNAME}__${volumeNameLc}"

fi

# create manual ANF backup

echo "Triggering manual ANF backup of snapshot $backupName in $volumeName volume..."

az netappfiles volume backup create --account-name $accountName --backup-name $backupName --location $LOCATION --name $volumeName --pool-name $poolName --resource-group $rgName --use-existing-snapshot true

rc=$?

if [ $rc -gt 0 ]; then

exit $rc

fi

done

exit 0

Set the executable permission on the file:

chmod 755 anfBackup.sh

Do a test Backup

List the volumes

az netappfiles volume list --resource-group ralf_rg --account-name ralf**** --pool-name ralfanfprem |grep name

"name": "ralfanfeastus/ralfanfprem/ralfANAbackup",

"name": "ralfanfeastus/ralfanfprem/ralfANAshared",

"name": "ralfanfeastus/ralfanfprem/ralfvol01",

List the snapshots of a volume

az netappfiles snapshot list --resource-group ralf_rg --account-name ralf*** --pool-name ralfanfultra --volume-name ralfANAdata |grep name

"name": "ralf**/ralfanfultra/ralfANAdata/Test__F38A83C2CCF",

"name": "ralf**/ralfanfultra/ralfANAdata/Test__F38A9164FDA",

Test the backup script with a previously created snapshot:

./anfBackup.sh Test 3 Test__F38A9164FDA azacsnap.json

Found 1 data volume(s) in the azacsnap configuration.

Triggering manual ANF backup of snapshot Test__F38A9164FDA in ralfANAdata volume...

Command group 'netappfiles volume backup' is in preview and under development. Reference and support levels: https://aka.ms/CLI_refstatus

{

"backupId": "775e6c77-f5b3-3718-825d-abb7c5ffc483",

"backupType": "Manual",

"creationDate": "0001-01-01T00:00:00+00:00",

"etag": "1/13/2023 9:44:15 AM",

"failureReason": "None",

"id": "/subscriptions/***/resourceGroups/ralf_rg/providers/Microsoft.NetApp/netAppAccounts/ralf***/capacityPools/ralfanfultra/volumes/ralfANAdata/backups/Test__F38A9164FDA",

"label": null,

"location": "",

"name": "ralf**/ralfanfultra/ralfANAdata/Test__F38A9164FDA",

"provisioningState": "Creating",

"resourceGroup": "ralf_rg",

"size": 0,

"type": "Microsoft.NetApp/netAppAccounts/capacityPools/volumes/backups",

"useExistingSnapshot": true,

"volumeName": null

}

Finished

Integrate anfBackup.sh script with azacsnap

The azacsnap --runafter feature is used to execute anfBackup.sh automatically after a local snapshot backup has been created.

https://learn.microsoft.com/en-us/azure/azure-netapp-files/azacsnap-cmd-ref-runbefore-runafter

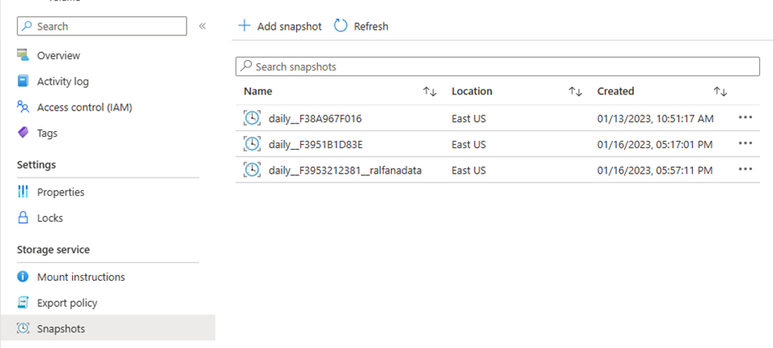

azacsnap -c backup --volume data --prefix daily --retention 2 --trim -vv --runafter 'env; ./anfBackup.sh $azPrefix $azRetention $azSnapshotName $azConfigFileName'%120
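If the --runafter hook fails, the local snapshot exists but no ANF backup is triggered. A hedged sketch of a small guard, assuming the four variables used in the command above (azPrefix, azRetention, azSnapshotName, azConfigFileName) are exported into the environment of the shell azacsnap forks, as the leading env call in that command suggests; the function names require_azacsnap_vars and run_anf_backup_hook are hypothetical.

```shell
#!/bin/bash
# Hypothetical guard for the --runafter command: refuse to call anfBackup.sh
# unless all azacsnap-provided variables used in this blog are set.
require_azacsnap_vars() {
    local name missing=0
    for name in azPrefix azRetention azSnapshotName azConfigFileName; do
        # ${!name} is bash indirect expansion: the value of the variable named $name
        if [ -z "${!name}" ]; then
            echo "ERROR: \$$name is not set" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Hypothetical wrapper: pass the variables on to anfBackup.sh in the current directory
run_anf_backup_hook() {
    require_azacsnap_vars || return 1
    ./anfBackup.sh "$azPrefix" "$azRetention" "$azSnapshotName" "$azConfigFileName"
}
```

To try it, append `run_anf_backup_hook` as the last line, save the file in /home/azacsnap/bin under a name of your choice, make it executable and reference it in --runafter instead of anfBackup.sh.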

Schedule regular backups via crontab

Make sure the SAP HANA client (hdbsql) is part of the search path ($PATH), e.g. modify the entry (if not already done when installing azacsnap) in .bashrc:

which hdbsql

/usr/sap/ANA/HDB00/exe/hdbsql

export PATH=$PATH:/usr/sap/ANA/HDB00/exe:/home/azacsnap/bin

Or if the client is separately installed:

export PATH=$PATH:/hana/shared/ANA/hdbclient:/home/azacsnap/bin

For easier scheduling, create a small wrapper script for the azacsnap execution. If the hdbclient is installed in a fixed location that is already in the search path, the lookup of a local installation (the find line) can be removed.

su - azacsnap

cd bin

vi cron_backup_daily.sh

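#!/bin/bash

BACKUP_PREFIX="daily"

PRIMARY_BACKUP_RETENTION=2

LOCATION="eastus"

# cron starts in $HOME with a minimal PATH: change to the directory holding azacsnap, azacsnap.json and anfBackup.sh so the relative paths below resolve

cd /home/azacsnap/bin || exit 1

## AZACSNAP - PRIMARY BACKUP ##

HANA_CLIENT_PATH=$(find /hana/shared/ -name hdbclient -type d -print 2>/dev/null | head -n 1)

export PATH=$PATH:/home/azacsnap/bin:$HANA_CLIENT_PATH

echo "Executing primary backup with prefix $BACKUP_PREFIX (retention ${PRIMARY_BACKUP_RETENTION})."

./azacsnap -c backup --volume data --prefix "$BACKUP_PREFIX" --retention "$PRIMARY_BACKUP_RETENTION" --trim -v --runafter 'env; ./anfBackup.sh $azPrefix $azRetention $azSnapshotName $azConfigFileName'%120

chmod 755 cron_backup_daily.sh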

Use this script to schedule daily backups via cron, e.g. twice a day:

crontab -l

# create daily backups twice a day and replicate them via ANF backup

0 2,14 * * * /home/azacsnap/bin/cron_backup_daily.sh

Special preview function – HANA ScaleOut with azacsnap

When taking snapshots with AzAcSnap on multiple volumes, all the snapshots have the same name by default. Because the volume name is removed from the resource ID hierarchy when the snapshot is archived into Azure NetApp Files Backup, it's necessary to ensure the snapshot name is unique. AzAcSnap can do this automatically when it creates the snapshot by appending the volume name to the normal snapshot name. For example, for a system with two data volumes (hanadata01, hanadata02), when doing a -c backup with --prefix daily, the complete snapshot names become daily__F2AFDF98703__hanadata01 and daily__F2AFDF98703__hanadata02.
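The naming rule can be sketched in a few lines of bash. This mirrors the renameOnly branch of anfBackup.sh (which also lower-cases the volume name); the function name anf_backup_name is hypothetical, and it only builds the name, it does not talk to azacsnap or Azure.

```shell
#!/bin/bash
# Hypothetical helper: build the ANF backup name the way anfBackup.sh does.
# With "renameOnly", "__<volume name in lower case>" is appended to the snapshot name;
# with any other mode the snapshot name is used unchanged.
anf_backup_name() {
    local snapshot="$1" volume="$2" mode="${3:-none}"
    if [ "$mode" = "renameOnly" ]; then
        printf '%s__%s\n' "$snapshot" "$(printf '%s' "$volume" | tr '[:upper:]' '[:lower:]')"
    else
        printf '%s\n' "$snapshot"
    fi
}
```

For example, `anf_backup_name daily__F2AFDF98703 hanadata01 renameOnly` prints daily__F2AFDF98703__hanadata01, matching the example above.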

Details on ANF Backup is in the Preview documentation here: Preview features for Azure Application Consistent Snapshot tool for Azure NetApp Files | Microsoft Learn

Extract for ANF Backup as follows:

Note: Support for Azure NetApp Files Backup is a Preview feature.

This can be enabled in AzAcSnap by setting "anfBackup": "renameOnly" in the configuration file, see the following snippet:


"anfStorage": [

{

"anfBackup" : "renameOnly",

"dataVolume": [

This can also be done by running

azacsnap -c configure --configuration edit --configfile <configfilename>

and entering renameOnly at the prompt:

Enter new value for 'ANF Backup (none, renameOnly)' (current = 'none'): renameOnly

As long as this feature is in preview, you need to add --preview to all azacsnap command calls in the scripts.

Once the feature is enabled the volume name becomes part of the snapshot name.

Housekeeping of ANF backups

ANF backup needs a small script that removes old backups from the ANF Backup blob space. To have an easy cleanup method from the HANA server, we created this script.

cat anfBackupCleanup.sh

#!/bin/bash

if [ $# -lt 3 ]; then

echo "$0 <backup_prefix> <secondary_retention> <configfile>"

exit 1

fi

BACKUP_PREFIX=$1

SECONDARY_BACKUP_RETENTION=$2

AZCONFIG=$3

LOCATION="eastus"

## ANF BACKUP - RETENTION MANAGEMENT ##

# parse azacsnap.json to get all data volumes and the required az netappfiles parameters

numDataVols=`jq '.database[].hana.anfStorage[].dataVolume | length' $AZCONFIG`

echo "Found $numDataVols data volume(s) in the azacsnap configuration."

for (( i=0; i<$numDataVols; i++ )); do

accountName=`jq -r ".database[].hana.anfStorage[].dataVolume[$i].accountName" $AZCONFIG`

volumeName=`jq -r ".database[].hana.anfStorage[].dataVolume[$i].volume" $AZCONFIG`

poolName=`jq -r ".database[].hana.anfStorage[].dataVolume[$i].poolName" $AZCONFIG`

rgName=`jq -r ".database[].hana.anfStorage[].dataVolume[$i].resourceGroupName" $AZCONFIG`

## retention management

#backupList=`az netappfiles volume backup list -g $rgName --account-name $accountName --pool-name $poolName --name $volumeName | jq -r '.[].id' | grep "$BACKUP_PREFIX" | sort`

backupList=`az netappfiles volume backup list -g $rgName --account-name $accountName --pool-name $poolName --name $volumeName | jq -r '. |= sort_by(.creationDate) | .[].id' | grep "$BACKUP_PREFIX"`

# count the matching backups (grep -c . counts non-empty lines, so an empty list gives 0)

numBackups=$(echo "$backupList" | grep -c .)

echo "Found $numBackups ANF backups with prefix $BACKUP_PREFIX for volume $volumeName. Configured max retention is $SECONDARY_BACKUP_RETENTION."

if [ $numBackups -gt $SECONDARY_BACKUP_RETENTION ]; then

numBackupsToDel=$(($numBackups-$SECONDARY_BACKUP_RETENTION))

c=0

for backupId in $backupList; do

if [ $c -lt $numBackupsToDel ]; then

echo "Deleting backup $backupId..."

az netappfiles volume backup delete --ids $backupId

c=$(($c+1))

fi

done

fi

done

exit 0

chmod 755 ./anfBackupCleanup.sh
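The retention logic of anfBackupCleanup.sh can be tried out without deleting anything by separating it from the az calls. A minimal sketch, assuming the backup IDs arrive one per line on stdin, already sorted oldest first as the jq sort_by(.creationDate) call produces them; backups_to_delete is a hypothetical helper that only prints the IDs that would be removed.

```shell
#!/bin/bash
# Hypothetical dry-run helper: read backup IDs (oldest first) from stdin and
# print the oldest ones that exceed the retention count given as $1.
backups_to_delete() {
    local retention="$1"
    local -a ids=()
    local line i
    while IFS= read -r line; do
        if [ -n "$line" ]; then
            ids+=("$line")
        fi
    done
    local excess=$(( ${#ids[@]} - retention ))
    # nothing is printed when the number of backups is within the retention
    for (( i = 0; i < excess; i++ )); do
        printf '%s\n' "${ids[$i]}"
    done
}
```

For example, as a dry run for the test below: `az netappfiles volume backup list -g ralf_rg --account-name <account> --pool-name ralfanfultra --name ralfANAdata | jq -r '. |= sort_by(.creationDate) | .[].id' | grep Test | backups_to_delete 1`.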

Test the script

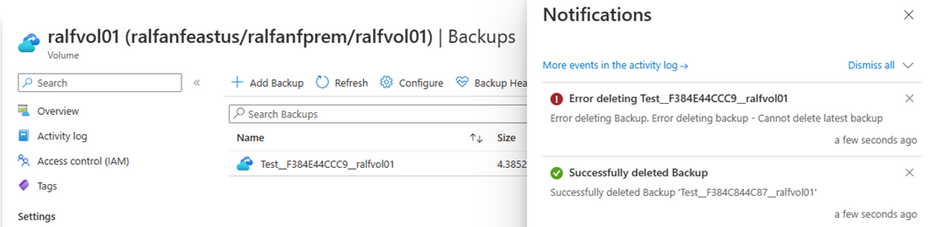

./anfBackupCleanup.sh Test 1 azacsnap.json

Found 1 data volume(s) in the azacsnap configuration.

WARNING: Command group 'netappfiles volume backup' is in preview and under development. Reference and support levels: https://aka.ms/CLI_refstatus

Found 2 ANF backups with prefix Test for volume ralfANAdata. Configured max retention is 1.

Deleting backup /subscriptions/******/resourceGroups/ralf_rg/providers/Microsoft.NetApp/netAppAccounts/ralf*****/capacityPools/ralfanfultra/volumes/ralfANAdata/backups/Test__F38A83C2CCF...

Command group 'netappfiles volume backup' is in preview and under development. Reference and support levels: https://aka.ms/CLI_refstatus

| Running ..

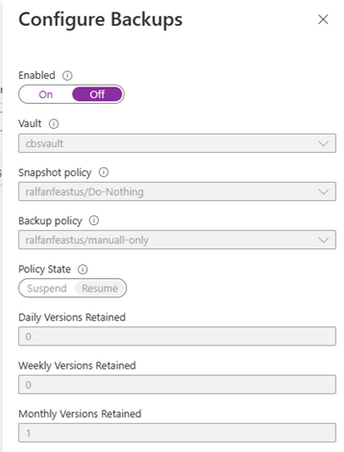

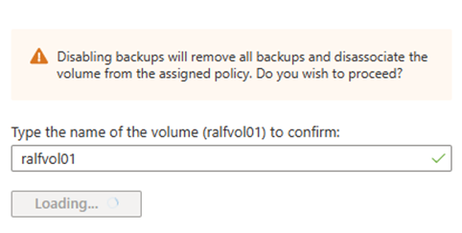

Disabling ANF Backup

If you want to disable ANF Backup and delete all backups, it is important to know that the last backup is the baseline copy of the volume and cannot be deleted without unconfiguring ANF Backup for this volume.

The resulting “error” is expected; it works as designed.

You first need to disable ANF Backup before you can delete the last backup.

Now all backups are deleted and the SnapMirror relationship is removed.

Log Backup to Azure Blob

Since we can “only” schedule 5 backups for an ANF volume, it makes sense to use a different way to back up the database log files. The time between two log file backups also defines the RPO (recovery point objective, i.e. the potential data loss). For the database log backup, we want a much shorter backup interval. Here we show how azcopy can be used for exactly this.

Install and setup the azcopy tool

Reference https://docs.microsoft.com/en-us/azure/storage/common/storage-use-azcopy-v10

su - azacsnap

cd bin

wget -O azcopy_v10.tar.gz https://aka.ms/downloadazcopy-v10-linux && tar -xf azcopy_v10.tar.gz --strip-components=1

azcopy -v

azcopy version 10.16.2

Configure the HANA log and data backup location

To make use of the ANF backup volume we created, we need to change the data and log backup locations in HANA. The default location for the backups is on /hana/shared, which is usually not what we want.

First, create the backup directories in the ANF backup volume.

su - anaadm

cd /hana/backup/ANA

df -h .

Filesystem Size Used Avail Use% Mounted on

10.4.6.6:/ralfANAbackup 2.6T 256K 2.6T 1% /hana/backup/ANA

pwd

/hana/backup/ANA

mkdir data log

ls -l

drwxr-x--- 2 anaadm sapsys 4096 Jan 13 10:57 data

drwxr-x--- 2 anaadm sapsys 4096 Jan 13 10:57 log

Now set up the backup destinations in HANA Studio.

You might also consider reducing the log backup interval. This shortens the RPO as well, because the log backup function is called much more often and we can save the logs to the Azure Blob space more frequently.

The default (parameter log_backup_timeout_s) is 900 seconds (15 minutes); I think 300 seconds (5 minutes) is a good value.
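A rough worst-case RPO estimate combines the two intervals involved: a commit made right after a log backup waits up to log_backup_timeout_s until it lands in /hana/backup/ANA/log, and then up to one cron interval until azcopy copies it to Blob. This is a simplified sketch under stated assumptions: the copy duration is ignored, and because HANA also writes a log backup as soon as a log segment is full, the timeout is an upper bound rather than a fixed interval. The function name is hypothetical.

```shell
#!/bin/bash
# Hypothetical estimate: worst-case RPO in seconds for logs copied to Blob,
# assuming RPO = log_backup_timeout_s + cron interval (copy time ignored).
worst_case_rpo_seconds() {
    local log_backup_timeout_s="$1" cron_interval_s="$2"
    echo $(( log_backup_timeout_s + cron_interval_s ))
}
```

With the default of 900 seconds and a 5-minute cron job, `worst_case_rpo_seconds 900 300` gives 1200 seconds (20 minutes); with 300 seconds it drops to 600 seconds (10 minutes).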

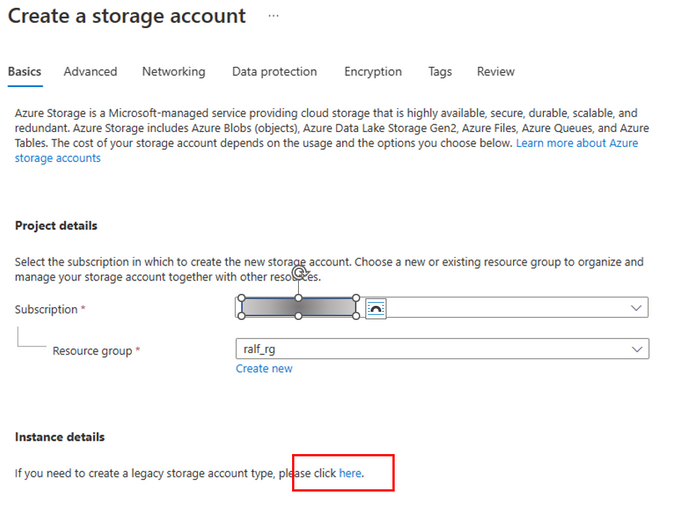

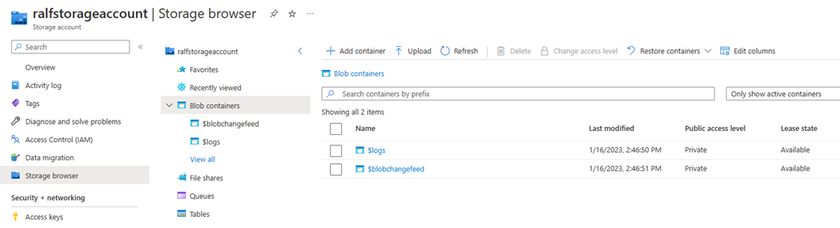

Create a Storage Account

Click on "here" to create a legacy storage account (this is what we want).

Create a “normal” v2 storage account. For more performance, you can use Premium.

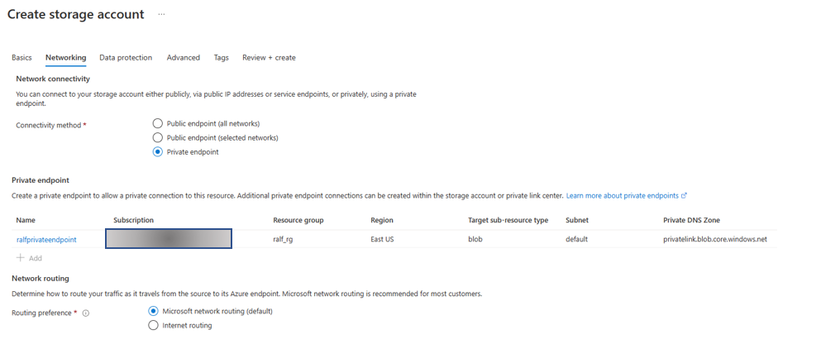

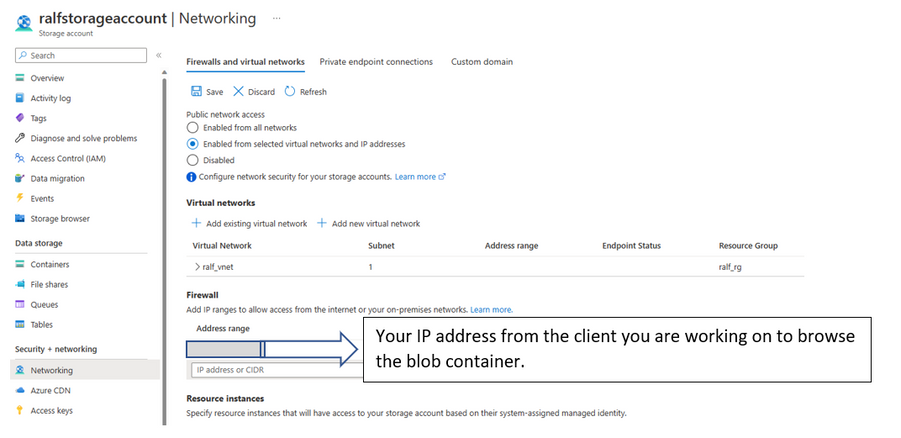

We highly recommend allowing only internal access to the blob space. For this, we create a private endpoint in the same Azure default subnet.

Click +Add to create the private endpoint.

These settings depend on your requirements; you may want to configure soft delete and other retention periods.

I disabled public access.

Select Review and then create the storage account.

Adapt the network settings

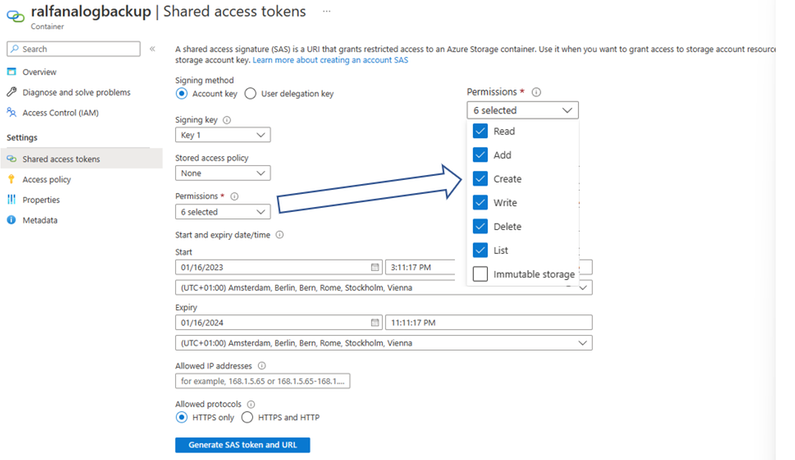

Create an SAS access token to access the storage.

The blob container should now be reachable from the VM's IP subnet through the private endpoint, AND not publicly reachable.

Test if you can write into this container.

Upload files to Azure Blob storage by using AzCopy v10 | Microsoft Learn

azcopy copy "azacsnap.json" "https://ralfstorageaccount.blob.core.windows.net/ralfanalogbackup?sp=racwdl&st=2023-01-16T14:11:17Z&se=2024-01-16T22:11:17Z&spr=https&sv=2021-06-08&sr=c&si****************ks%3D"

INFO: Scanning...

INFO: Any empty folders will not be processed, because source and/or destination doesn't have full folder support

Job 9d201f0f-61a1-f54e-5045-b7913d7ef162 has started

Log file is located at: /home/azacsnap/.azcopy/9d201f0f-61a1-f54e-5045-b7913d7ef162.log

100.0 %, 1 Done, 0 Failed, 0 Pending, 0 Skipped, 1 Total, 2-sec Throughput (Mb/s): 0.0069

Job 9d201f0f-61a1-f54e-5045-b7913d7ef162 summary

Elapsed Time (Minutes): 0.0333

Number of File Transfers: 1

Number of Folder Property Transfers: 0

Total Number of Transfers: 1

Number of Transfers Completed: 1

Number of Transfers Failed: 0

Number of Transfers Skipped: 0

TotalBytesTransferred: 1735

Final Job Status: Completed

You should be able to see the file with the data browser.

Log backup with azcopy

Now create a little script which will copy the log backup folder to this container.

su - azacsnap

cd bin

vi logbackup.sh

#!/bin/bash

DATE="$(date +%m-%d-%Y)"

# The SID of the Database

SID=ANA

# This Folder/Directory will be backed up to the Azure Blob Container

BACKUPFOLDER=/hana/backup/"${SID}"/log

# The SAS Access Key

CONTAINER_SAS_KEY="https://ralfstorageaccount.blob.core.windows.net/ralfanalogbackup?sp=racwdl&st=2023-01-16T14:11:17Z&se=2024-01-16T22:11:17Z&spr=https&sv=2021-06-08&sr=c&sig=xz************ks%3D"

# The Logfile

LOGFILE="/tmp/LogBackup_${SID}.log"

# MAX LOGFILE SIZE is 2 MB

MAXSIZE=2000000

# Get file size (0 if the logfile does not exist yet, e.g. on the first run)

FILESIZE=$(stat -c%s "$LOGFILE" 2>/dev/null || echo 0)

echo "Size of $LOGFILE = $FILESIZE bytes." >>"$LOGFILE"

if (( FILESIZE > MAXSIZE )); then

echo "moving the LogBackup logfile to a backup" >>"$LOGFILE"

mv "$LOGFILE" "${LOGFILE}-${DATE}"

else

echo "Logfilesize OK" >>"$LOGFILE"

fi

echo "$(date) -- Copy the log backup files to the blob Space" >>"$LOGFILE"

# Quote the SAS URL (it contains "?" and "&"). azcopy copy re-uploads every file on each run by default; consider --overwrite=ifSourceNewer to skip unchanged files.

/home/azacsnap/bin/azcopy copy "${BACKUPFOLDER}" "${CONTAINER_SAS_KEY}" --recursive=true >>"$LOGFILE"

RC=$?

printf "\nReturn-Code=%s \n\n" "$RC" >>"$LOGFILE"

chmod 755 logbackup.sh

The Logfile is located in /tmp

cat /tmp/LogBackup_ANA.log

Mon Jan 16 15:51:47 UTC 2023 -- Copy the log backup files to the blob Space

INFO: Scanning...

INFO: Any empty folders will not be processed, because source and/or destination doesn't have full folder support

Job 719fdfbe-f12f-714f-6684-9b8916ca0fea has started

Log file is located at: /home/azacsnap/.azcopy/719fdfbe-f12f-714f-6684-9b8916ca0fea.log

100.0 %, 5526 Done, 0 Failed, 0 Pending, 0 Skipped, 5526 Total, 2-sec Throughput (Mb/s): 3848.8537

Job 719fdfbe-f12f-714f-6684-9b8916ca0fea summary

Elapsed Time (Minutes): 0.0667

Number of File Transfers: 5526

Number of Folder Property Transfers: 0

Total Number of Transfers: 5526

Number of Transfers Completed: 5526

Number of Transfers Failed: 0

Number of Transfers Skipped: 0

TotalBytesTransferred: 3078959104

Final Job Status: Completed

Return-Code=0

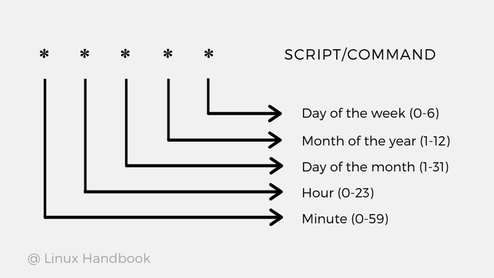

Add the log backup to the crontab

Add both scripts to the crontab of the azacsnap user: the daily snapshot backup twice a day and the log backup every 5 minutes:

crontab -e

# create daily backups twice a day and replicate them via ANF backup

0 2,14 * * * /home/azacsnap/bin/cron_backup_daily.sh

# create a log backup to the blob container every 5 minutes

*/5 * * * * /home/azacsnap/bin/logbackup.sh

Information about crontab

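As you can see, a crontab entry starts with five time fields (in the simplest case, five asterisks). Here is what each field represents:

- minute (0-59)

- hour (0-23)

- day of month (1-31)

- month (1-12)

- day of week (0-7, where both 0 and 7 are Sunday)

For example, 0 2,14 * * * runs at 02:00 and 14:00 every day, and */5 * * * * runs every 5 minutes.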

Restore data from Blob

In the worst case, we need to download log files from the Azure Blob space. You simply need to “reverse” the azcopy copy command, i.e. swap source and destination.

Download blobs from Azure Blob Storage by using AzCopy v10 | Microsoft Learn

Copy a file to Azure blob

azcopy copy "azacsnap.json" "https://ralfstorageaccount.blob.core.windows.net/ralfanalogbackup?sp=racwdl&st=2023-01-16T14:11:17Z&se=2024-01-16T22:11:17Z&spr=https&sv=2021-06-08&sr=c&********ZXK7IeRSRwUvks%3D"

Restore this file back to the /tmp directory:

azcopy copy "https://ralfstorageaccount.blob.core.windows.net/ralfanalogbackup/azacsnap.json?sp=racwdl&st=2023-01-16T14:11:17Z&se=2024-01-16T22:11:17Z&spr=https&sv=2021-06-08&sr=c**%3D" "/tmp/azacsnap.json"

INFO: Scanning...

INFO: Any empty folders will not be processed, because source and/or destination doesn't have full folder support

Check

ls -l /tmp/azacsna*

-rw-r--r-- 1 azacsnap users 1741 Jan 17 09:25 /tmp/azacsnap.json
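For the restore command, the file path has to be inserted between the container URL and the SAS query string. A small sketch that does this mechanically, assuming the SAS URL has the form https://<account>.blob.core.windows.net/<container>?<sas> used throughout this blog; the function name blob_url is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: insert a blob path in front of the "?" of a container SAS URL.
blob_url() {
    local container_sas_url="$1" path="$2"
    local base query
    base=${container_sas_url%%\?*}    # everything before the first "?"
    query=${container_sas_url#*\?}    # everything after the first "?"
    if [ "$base" = "$container_sas_url" ]; then
        # no SAS query string present
        printf '%s/%s\n' "${base%/}" "$path"
    else
        printf '%s/%s?%s\n' "${base%/}" "$path" "$query"
    fi
}
```

For example, since logbackup.sh uploads the log folder as log/ into the container, `azcopy copy "$(blob_url "$CONTAINER_SAS_KEY" log)" /tmp/restore/ --recursive=true` should bring the log backups back into /tmp/restore/log (the restore directory is an assumption; restoring to a separate directory avoids overwriting existing files).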

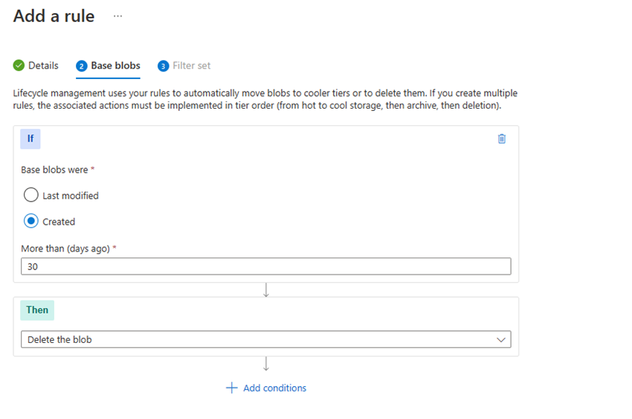

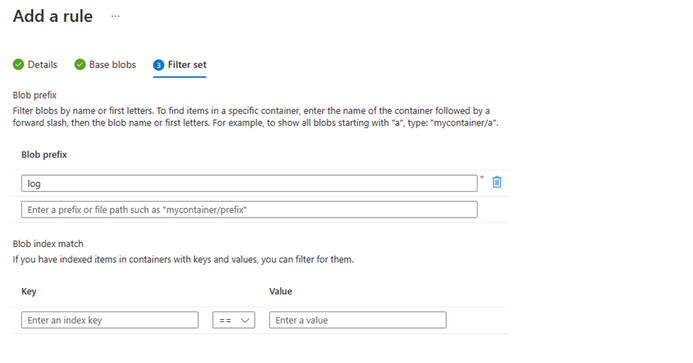

Managing Blob retention times

To automatically remove the log files from the Blob space, you can create a lifecycle management rule in which you specify the exact number of days to keep the database log files in the Blob space.

Create a lifecycle rule.

Add a rule
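The same rule can also be created from the command line. A hedged sketch, assuming the log backups were uploaded under the log/ folder of the ralfanalogbackup container by logbackup.sh; the rule name, the 14-day retention and the function name write_lifecycle_policy are illustrative assumptions. The function only writes the policy JSON file.

```shell
#!/bin/bash
# Hypothetical helper: write a lifecycle management policy that deletes block blobs
# under a prefix ("<container>/<folder>/") once they were not modified for N days.
write_lifecycle_policy() {
    local days="$1" prefix="$2" outfile="$3"
    cat > "$outfile" <<EOF
{
  "rules": [
    {
      "enabled": true,
      "name": "delete-old-log-backups",
      "type": "Lifecycle",
      "definition": {
        "actions": {
          "baseBlob": {
            "delete": { "daysAfterModificationGreaterThan": ${days} }
          }
        },
        "filters": {
          "blobTypes": [ "blockBlob" ],
          "prefixMatch": [ "${prefix}" ]
        }
      }
    }
  ]
}
EOF
}
```

For example, `write_lifecycle_policy 14 ralfanalogbackup/log/ policy.json`, then apply it with `az storage account management-policy create --account-name ralfstorageaccount --resource-group <resource-group> --policy @policy.json`. A storage account holds a single management policy, so check existing rules first with `az storage account management-policy show`.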

End